Origins of Electronic Sounds

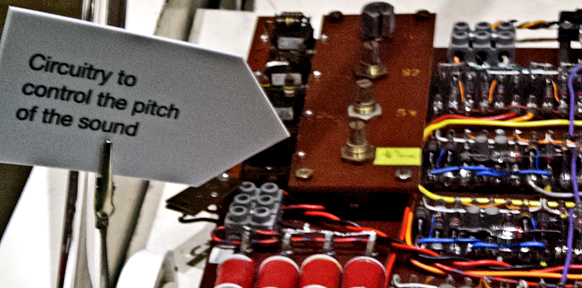

THE VOLTAGE-CONTROLLED ANALOG SYNTHESIZER

Peter Manning noted “The ability to draw the dynamic shaping of pitched events not only allows a readily assimilated audio-visual correlation of specifications, it also overcomes the rigid attack and decay characteristics of electronic envelope shapers”.

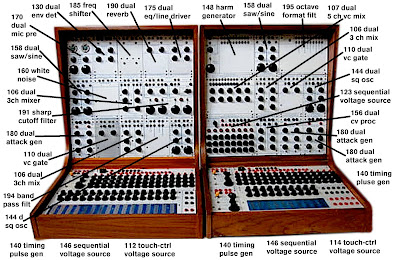

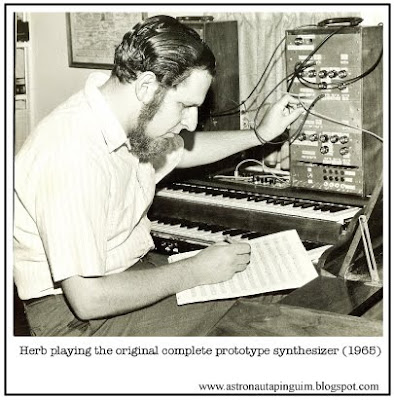

“Our idea was to build the black box that would be a palette for composers in their homes. It would be their studio. The idea was to design it so that it was like an analog computer. It was not a musical instrument but it was modular...It was a collection of modules of voltage-controlled envelope generators and it had sequencers in it right off the bat...It was a collection of modules that you would put together. There were no two systems the same until CBS bought it...Our goal was that it should be under $400 for the entire instrument and we came very close. That’s why the original instrument I fundraised for was under $500.

“I would say that philosophically the prime difference in our approaches was that I separated sound and structure and he didn’t. Control voltages were interchangeable with audio. The advantage of that is that he required only one kind of connector and that modules could serve more than one purpose. There were several drawbacks to that kind of general approach, one of them being that a module designed to work in the structural domain at the same time as the audio domain has to make compromises. DC offset doesn’t make any difference in the sound domain but it makes a big difference in the structural domain, whereas harmonic distortion makes very little difference in the control area but it can be very significant in the audio areas. You also have a matter of just being able to discern what’s happening in a system by looking at it. If you have a very complex patch, it’s nice to be able to tell what aspect of the patch is the structural part of the music versus what is the signal path and so on. There’s a big difference in whether you deal with linear versus exponential functions at the control level and that was a very inhibiting factor in Moog’s more general approach.

“Uncertainty is the basis for a lot of my work. One always operates somewhere between the totally predictable and the totally unpredictable and to me the ‘source of uncertainty,’ as we called it, was a way of aiding the composer. The predictabilities could be highly defined or you could have a sequence of totally random numbers. We had voltage control of the randomness and of the rate of change so that you could randomize the rate of change. In this way you could make patterns that were of more interest than patterns that are totally random.”

While the early Buchla instruments contained many of the same modular functions as the Moog, they also contained a number of unique devices such as random control voltage sources, sequencers and voltage-controlled spatial panners. Buchla has maintained his unique design philosophy over the intervening years, producing a series of highly advanced instruments that often incorporate hybrid digital circuitry and unique control interfaces.
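The idea of controlling both the degree of randomness and the rate of change can be illustrated in software. The following is only a minimal sketch under stated assumptions, not a model of Buchla's actual circuitry: the function name `uncertain_voltages`, the 0 to 10 volt range, the repeating four-step pattern, and the `randomness` and `rate` parameters are all hypothetical choices made for illustration.

```python
import random


def uncertain_voltages(steps, randomness, rate,
                       pattern=(2.0, 4.0, 6.0, 8.0), seed=0):
    """Return a list of `steps` simulated control voltages.

    randomness: 0.0 follows the fixed pattern exactly,
                1.0 draws every new value at random (assumed 0-10 V range).
    rate:       probability, per step, that the output moves to a new value;
                otherwise the previous voltage is held.
    All names and ranges here are illustrative assumptions.
    """
    if not 0.0 <= randomness <= 1.0 or not 0.0 <= rate <= 1.0:
        raise ValueError("randomness and rate must lie in [0, 1]")

    rng = random.Random(seed)      # seeded so results are repeatable
    current = pattern[0]           # start on the first pattern value
    position = 0
    output = []

    for _ in range(steps):
        if rng.random() < rate:    # decide whether the voltage changes
            position = (position + 1) % len(pattern)
            noise = rng.uniform(0.0, 10.0)
            # Blend the predictable pattern with a random value.
            current = (1.0 - randomness) * pattern[position] + randomness * noise
        output.append(current)

    return output


if __name__ == "__main__":
    print(uncertain_voltages(8, randomness=0.0, rate=1.0))  # pure pattern
    print(uncertain_voltages(8, randomness=0.3, rate=0.5))  # partly uncertain
```

Intermediate settings give sequences that stay close to the pattern but drift from it at irregular moments, which is one way to read the remark about patterns that are of more interest than totally random ones.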

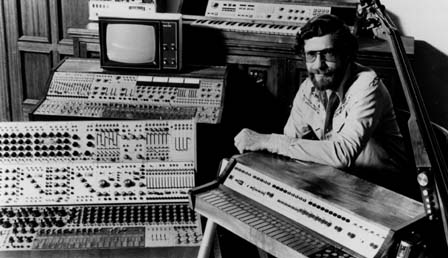

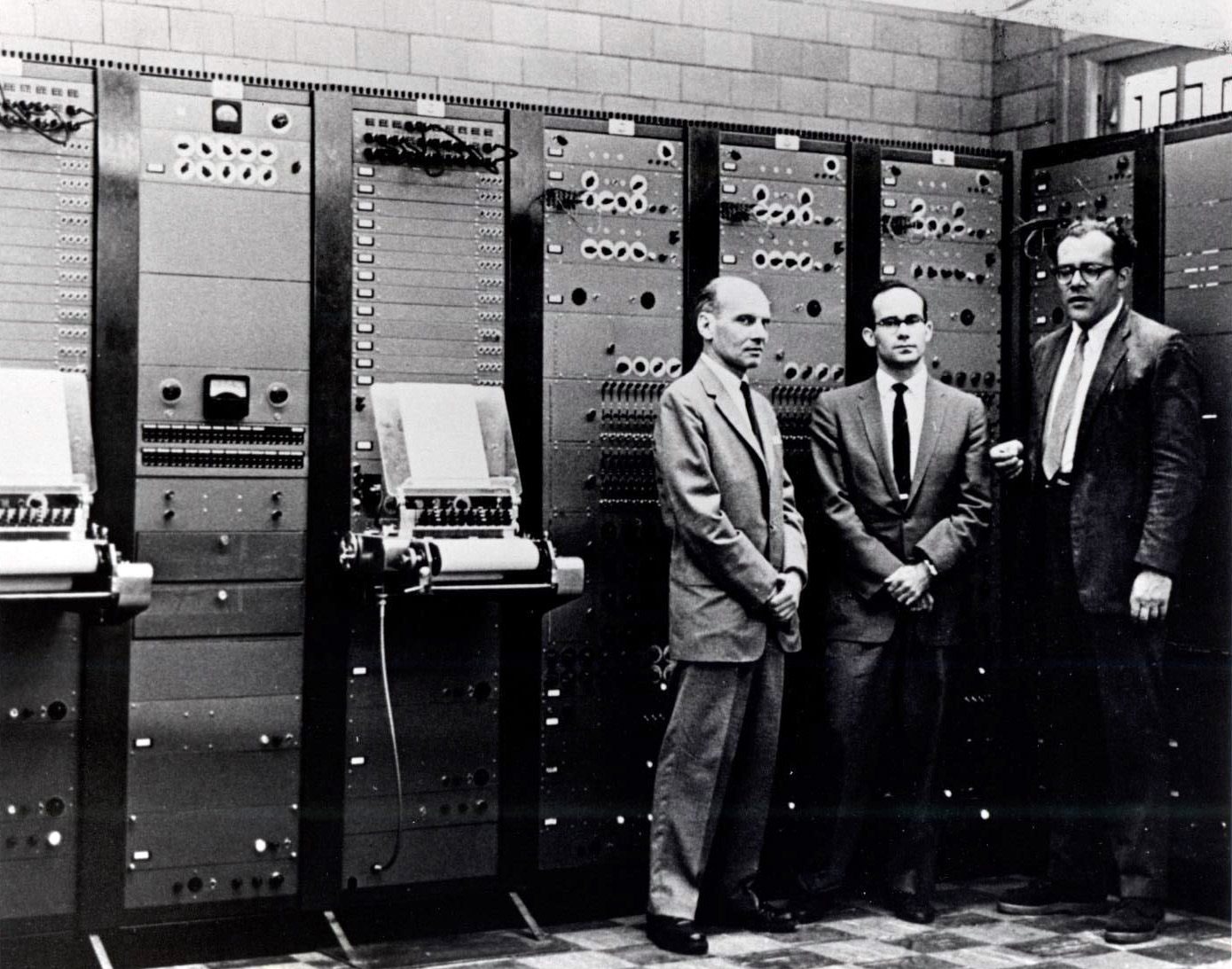

The Moog synthesizer

Interest in the new instrument was immediate and Moog began making modular synthesizers for experimental composers and the academic community. Widespread awareness of Moog's name came when the synthesizer featured in a number of commercially successful albums. Today, the Moog synthesizer has proven its indispensability through its widespread acceptance. Moog synthesizers are in use in hundreds of studios maintained by universities, recording companies, and private composers throughout the world. Dozens of successful recordings, film scores, and concert pieces have been realized on Moog synthesizers. The basic synthesizer concept as developed by R.A. Moog, Inc., as well as a large number of technological innovations, have literally revolutionized the contemporary musical scene, and have been instrumental in bringing electronic music into the mainstream of popular listening.

The Minimoog

In 1970, Robert Moog produced another groundbreaking instrument, the Minimoog. Unlike previous synthesizers, the Minimoog abandoned the modular design in favor of building all the electronics into a single keyboard unit. What was sacrificed in modular flexibility was gained in ease of use and portability.

“The result is an instrument which is applicable to studio composition as much as to live performance, to elementary and high school music education as much as to university instruction, to the demands of commercial music as much as to the needs of the experimental avant garde. The Mini Moog offers a truly unique combination of versatility, playability, convenience, and reliability at an eminently reasonable price.”

The Synth Wars

After these initial efforts a number of other American designers and manufacturers followed the lead of Buchla and Moog. One of the most successful was the ARP SYNTHESIZER built by Tonus, Inc. with design innovations by the team of Dennis Colin and David Friend. The studio version of the ARP was introduced in 1970 and basically imitated modular features of the Moog and Buchla instruments.

In Europe, the major manufacturer was undoubtedly EMS, a British company founded by its chief designer Peter Zinovieff. EMS built the Synthi 100, a large integrated system which introduced a matrix-pinboard patching system, and a small portable synthesizer based on similar design principles, initially called the Putney but later modified into the SYNTHI A or Portabella. This latter instrument became very popular with a number of composers who used it in live performance.

Polyphonic synthesizers

Until the mid-1970s most synthesizers were monophonic, that is to say capable of producing only one note at a time. A few exceptions, including Moog's Sonic Six and the ARP Odyssey, were duophonic (able to play two notes at once). True polyphonic instruments, able to play chords, appeared in 1975 in the form of the Polymoog, with the equally classic Yamaha CS-80 and the Oberheim Four-Voice being released the following year.

Analog Versus Digital

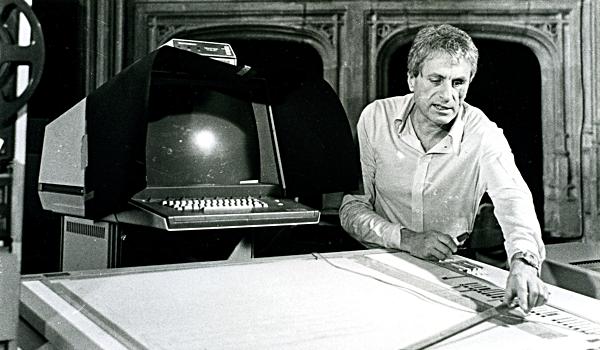

COMPUTER MUSIC

The early vision of why computers should be applied to music was elegantly expressed by the scientist Heinz von Foerster: “Accepting the possibilities of extensions in sounds and scales, how do we determine the new rules of synchronism and succession?

“It is at this point, where the complexity of the problem appears to get out of hand, that computers come to our assistance, not merely as ancillary tools but as essential components in the complex process of generating auditory signals that fulfill a variety of new principles of a generalized aesthetics and are not confined to conventional methods of sound generation by a given set of musical instruments or scales nor to a given set of rules of synchronism and succession based upon these very instruments and scales. The search for those new principles, algorithms, and values is, of course, in itself symbolic for our times.”

By the end of the 1960s, computer sound synthesis research saw a large number of new programs in operation at a variety of academic and private institutions. Working in the medium, however, was still quite tedious and, regardless of the increased sophistication in control, its final product remained tape. Some composers had taken the initial steps towards using the computer for realtime performance by linking the powerful control functions of the digital computer to the sound generators and modifiers of the analog synthesizer. We will deal with the specifics of this development in the next section. From its earliest days the use of the computer in music can be divided into two fairly distinct categories, even though these categories have been blurred in some compositions: 1) the use of the computer predominantly as a compositional device to generate structural relationships that could not be imagined otherwise and 2) the use of the computer to generate new synthetic waveforms and timbres.

The Digital Synthesizer Revolution

MIDI

CONCLUSION
